Web Development & Software Engineering

From next-gen startups to established enterprises.

Design

The best custom software development companies also offer the best UX design, and the best UX starts with strategic planning. By aligning our digital transformation solutions with your vision and goals, we become a true partner, starting with UX/UI design.

Services

We provide support for all of your custom software development projects.

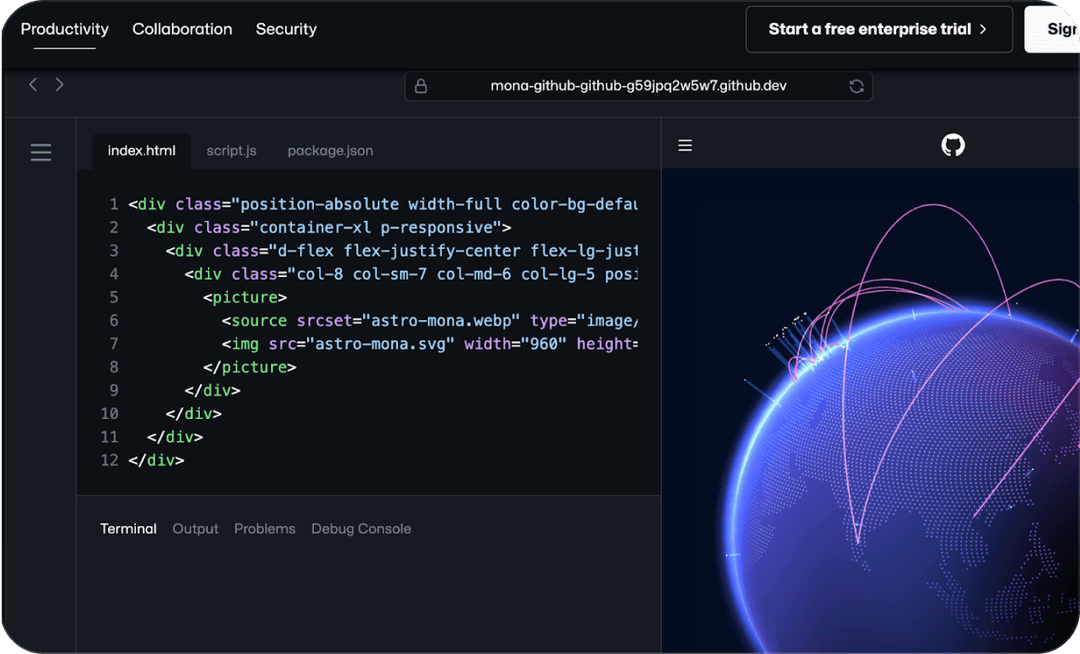

HTML

CSS

JavaScript

Frameworks

The best software development firms start their web development projects at the design phase.

React.js

Next.js

Webflow

Shopify

DEV.co design process

Website visitors who land on your site will be at varying points in the customer journey.

Goal Identification

Research & Discovery

Prototyping

Testing & Review

Why invest

When users can find what they came for, they’ll develop a positive impression of your brand.

Influence visitor behavior

Eliminate barriers to conversion

Deliver a branded experience online

Clear calls to action

Develop

From product design mock-ups & MVP sprints to product iteration & QA (quality assurance) testing, our expert project management team and software development services have you covered.

Services

We provide support for all of your custom software development projects.

Node.js

Laravel

PHP

Backend Development

Our full-stack dedicated development team provides the front-end and back-end support your software projects deserve.

Python

C#/.NET

SQL/SQL Server

Oracle

Deploy

Delivery of a product ready for “prime time” also means you have the custom software development support team to shepherd you through training, upgrades and feature add-ons. Software deployment is only the beginning of a journey.

Services

We provide support for all of your custom software development projects.

Node.js

Laravel

PHP

Deployment for the Web

Our full-stack dedicated development team provides the front-end and back-end support your software projects deserve.

React.js

Next.js

Webflow

Shopify

Innovative Software Development Services

Our Services

At DEV.co, we provide full-stack custom software development solutions to businesses small and large.

As a custom software dev agency, we cover the full breadth of custom solutions by developing websites, mobile apps, custom portals, billing solutions, artificial intelligence, SaaS, big data, and other cloud-based applications.

12+ years as a software development company

For over a decade, we have developed a proven, repeatable system for delivering quality code. We provide solutions across the software development life cycle, ensuring we out-deliver other custom software development firms on the market.

Mobile Application with 3M+ downloads

Built for Android & Apple iOS

Fortune 100 Retailer Manages $1B+ in Sales

Strategic software development support

As an end-to-end service provider, we deliver in-depth strategic support and custom software development services to corporations, government entities, and small and medium-sized businesses across the globe, ensuring your digital success.

We aim to deliver on time and under budget

Our experienced, dedicated team brings deep end-to-end engineering talent and programming expertise to deliver the data analytics, strategic roadmap, and maintenance services required to complete your entire project at or below budget.

Our Web Development Clients

Our custom development services are trusted by software companies, small businesses, and Fortune 500 companies alike.

Website Development

Our web development team builds dozens of websites monthly, integrating the right technology stack with cross-platform applications to deliver the best possible user experience in your web apps.

Mobile App Development

Over 150 custom mobile apps developed, including one for Android & iOS with 3M+ downloads.

Custom Platform Development

Custom-developed corporate and customer-facing platforms, including integrated analytics for analyzing site usage in our big data consulting projects.

A Custom Software Development Company Engineering Innovation

We only engage the top 1% of vetted software engineering talent. Our software engineers and custom software project developers come fully vetted, with years of active project-based experience, including deliverables and glowing reviews from past client engagements. We also use a proven candidate filtration process to sift through thousands of active software developers worldwide, narrowing the field to those with ample experience across the tech stack through live projects, engineering exams, coding challenge reports, and pre-hire interviews, so you get the best talent available. In this way, we work as a software development partner and not just a vendor.

Whether you need temporary staffing support for a small project or a complete dedicated team managed by senior developers for a long-term engagement with cross-platform applications, our IT and software development staffing solutions are there to assist throughout the process. We use sophisticated artificial intelligence (AI) to source the best software engineering talent for your custom development projects, ensuring a precise match between candidates' industry experience and past performance and your unique project requirements.

When you work with us, you engage the right software development team for your project or staffing needs, matched to the nature and complexity of your project. Regardless of your project's complexity, our engineering professionals will integrate seamlessly with your in-house team to provide a custom roadmap and delivery timeline. This value-added approach sets you up for success from day one and ensures you receive the support you need for your custom product and deliver on your project goals.

Software Development Staffing: A Partnership

At DEV.co, we see software development and IT staffing as a partnership. It’s our job to help you achieve your goals, whatever they may be. If you’re interested in learning more about our staffing process, or if you’re ready for a free quote on our IT staffing services, contact us today!

FAQs

Frequently Asked Questions

Here are some of the most common questions regarding our software development services.

Software development is a process that can be used to create custom software for a customer or client. This process can be used to create custom applications, middleware, or even operating systems. A software development company can provide these services to businesses of all sizes, from small businesses to large enterprise organizations.

When choosing a custom software development company, it is important to consider the company's experience and expertise. The company should have a good reputation and be able to provide references. It is also important to consider the cost of the services, and make sure that the company has a good track record of completing projects on time and on budget.

The best software development companies have a number of characteristics in common, including:

- They have a lot of experience and expertise in software development

- They have a good reputation and are able to provide references

- They are able to complete projects on time and on budget

- They have a team of skilled developers who are able to meet the needs of the customer or client

- They use the latest tools and technologies

- They have a strong understanding of the software development process

- They are able to provide support and maintenance after the software has been deployed

What questions should you ask your software development company before hiring them?

- What is your experience and expertise in software development?

- Do you have a good reputation, and can you provide references?

- Can you complete projects on time and on budget?

- Do you have a team of skilled developers who can meet our needs?

- What tools and technologies do you use?

- Do you have a strong understanding of the software development process?

- What kind of support and maintenance can you provide after the software has been deployed?

The agile software development process consists of the following five stages:

- Initiation: This stage includes gathering requirements from the customer or client and designing the software.

- Planning: In this stage, the development team creates a plan for developing the software. This includes creating a schedule and budget, and determining which features will be included in each stage of the project.

- Execution: In this stage, the development team writes code and tests it to make sure it meets all of the requirements.

- Delivery: In this stage, the software is delivered to the customer or client for testing.

- Feedback: In this stage, the customer or client provides feedback about the software, and the development team makes changes based on that feedback.

How does agile software development differ from other methodologies?

- Agile development is a process that is used to create custom software for a customer or client. It is a collaborative process that involves regular communication between the customer or client and the development team. Agile software development is based on the principle of iterative and incremental development, which means that the software is developed in stages, and new features are added after each stage has been completed. This allows the customer or client to see a working prototype of the software and provide feedback.

- At any given time, we are using various software development tools to fully implement agile software development methodologies.

Custom software development services can vary in price, depending on the size and complexity of the project. However, most companies charge by the hour, with rates that range from $75 to $250 per hour. Some companies also charge a fixed price for the project, which is determined by the scope of work. Contact us today for a quote on your next project!
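As a rough illustration of the hourly pricing model described above, the sketch below multiplies an estimated number of billable hours by the low and high ends of the $75 to $250 per-hour range. It is a simplified back-of-the-envelope calculation, not a quoting tool: the function name `estimate_cost_range` and the 1,000-hour example are hypothetical, and real quotes also reflect the factors listed below.

```python
# Rough cost range for an hourly-billed project, using the $75 to $250
# per-hour range quoted above. The hour estimate is a hypothetical input.
LOW_RATE = 75    # USD per hour, low end of the quoted range
HIGH_RATE = 250  # USD per hour, high end of the quoted range


def estimate_cost_range(hours: float) -> tuple[float, float]:
    """Return (low, high) total cost in USD for the given billable hours."""
    if hours < 0:
        raise ValueError("hours must be non-negative")
    return hours * LOW_RATE, hours * HIGH_RATE


if __name__ == "__main__":
    # Hypothetical example: a project estimated at 1,000 billable hours.
    low, high = estimate_cost_range(1_000)
    print(f"${low:,.0f} to ${high:,.0f}")  # prints "$75,000 to $250,000"
```

The wide spread between the two totals is why an accurate hour estimate, based on a defined scope of work, matters as much as the hourly rate itself.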

When considering the cost of software development services, there are several factors to take into account:

- The size and complexity of the project. The larger and more complex the project, the higher the cost of the services.

- The development team's experience and expertise. More experienced and expert developers will charge more for their services.

- The company's overhead costs. Companies with higher overhead costs, such as larger companies or those located in major metropolitan areas, will charge more for their services.

- The type of project. Some projects, such as those that require a lot of custom coding, will be more expensive than others.

- The development methodology used. Agile software development can be more expensive than traditional waterfall development.

- The number of hours required to complete the project. The more hours required, the higher the cost of the services.

- The geographical location of the development team. Development teams in countries such as India or China typically charge less than teams in North America or Europe.

Increased efficiency

- Custom software is designed to meet the specific needs of a business, which can help to increase efficiency and productivity.

Improved customer satisfaction

- By providing a software solution that is tailored to the specific needs of a customer or client, businesses can improve customer satisfaction levels.

Cost savings

- By avoiding the need to purchase off-the-shelf software, businesses can save money on licensing and long-term maintenance costs.

Custom software development using the agile method typically takes between 4 and 6 months, but can take longer depending on the complexity of the project.